Backing up data from Google Search Console (GSC) is critical for digital marketers and SEO specialists: GSC data provides key insights into website performance, but Google only retains 16 months of it. To make sure you never lose access to critical historical data, an automated backup process built with Google Cloud, Docker, and Python is a powerful solution. In this guide, we'll walk you through configuring Google Search Console backups with a Python script, a Docker container, and a Google Cloud deployment.

tl;dr: By using Docker, Google Cloud, and Python, you can set up an automated backup solution for your Google Search Console data. With Cloud Run, you eliminate the need for traditional server management, while Google Cloud Storage ensures that your backup data is secure and easily accessible. This solution will save you hours of work and prevent data loss, allowing you to focus on growing your business.

1. Requirements

- A Google Cloud account and the Google Cloud SDK (the gcloud CLI)

- Access to the Google Search Console property you want to back up

- Docker

2. Setting Up the Python Script

The core of this backup process is a Python script that pulls data from GSC. You can use the Google API client for Python (google-api-python-client) to query the Search Console API and then store the data in CSV format.

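- API Authentication: The script authenticates with Application Default Credentials, so when it runs on Google Cloud it uses the identity of its service account and you don't need to create, download, or rotate service account keys manually.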

- Fetching GSC Data: The script fetches data like clicks, impressions, average position, and CTR from Google Search Console for the last 30 days.

- Data Export: It then converts the data to a CSV file for storage.

- Cloud Storage Upload: The script uploads the CSV file to Google Cloud Storage, where it can be accessed later.
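The fetch step might look roughly like the following minimal sketch. It assumes Application Default Credentials (which, on Cloud Run, resolve to the service's own service account), the read-only `webmasters.readonly` scope, and the `searchconsole` v1 API exposed through google-api-python-client. The dimension list, the three-day lag, and the helper names (`date_window`, `build_service`, `fetch_all_rows`) are illustrative assumptions, not details of the original script.

```python
from datetime import date, timedelta

# Read-only scope for Search Console data
SCOPES = ["https://www.googleapis.com/auth/webmasters.readonly"]
# Assumption: dimensions to back up; adjust to your reporting needs
DIMENSIONS = ["date", "query", "page"]
# The Search Analytics API returns at most 25,000 rows per request
ROW_LIMIT = 25000


def date_window(today: date, days: int = 30, lag_days: int = 3) -> tuple[str, str]:
    """Return (startDate, endDate) ISO strings spanning `days` days inclusive.

    Assumption: end the window `lag_days` ago, since the most recent GSC
    data is often still incomplete.
    """
    end = today - timedelta(days=lag_days)
    start = end - timedelta(days=days - 1)
    return start.isoformat(), end.isoformat()


def build_service():
    """Build a Search Console API client from Application Default Credentials."""
    # Imported lazily so the pure helpers above work without the libraries
    import google.auth
    from googleapiclient.discovery import build

    credentials, _project = google.auth.default(scopes=SCOPES)
    return build("searchconsole", "v1", credentials=credentials, cache_discovery=False)


def fetch_all_rows(service, site_url: str, start_date: str, end_date: str) -> list[dict]:
    """Page through searchanalytics.query until a page comes back short."""
    rows: list[dict] = []
    start_row = 0
    while True:
        body = {
            "startDate": start_date,
            "endDate": end_date,
            "dimensions": DIMENSIONS,
            "rowLimit": ROW_LIMIT,
            "startRow": start_row,
        }
        response = service.searchanalytics().query(siteUrl=site_url, body=body).execute()
        page = response.get("rows", [])
        rows.extend(page)
        if len(page) < ROW_LIMIT:
            return rows
        start_row += ROW_LIMIT
```

Because each request returns at most 25,000 rows, the loop advances `startRow` until a page comes back short; with fine-grained dimensions such as query and page, a 30-day window can exceed a single page for larger sites.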

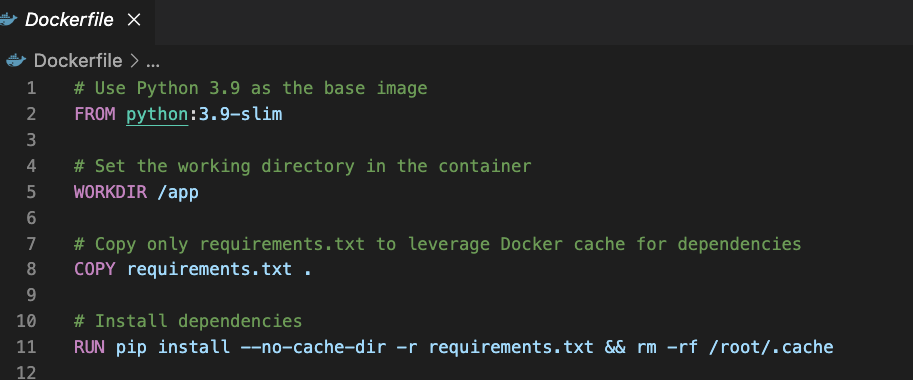
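Converting the rows to CSV and uploading them could be sketched as below. The original script uses pandas; this sketch swaps in the standard-library `csv` module to keep dependencies minimal (`pandas.DataFrame(...).to_csv()` would do the same job). The object naming scheme in the hypothetical `backup_object_name` helper is an assumption, and `upload_csv` relies on the google-cloud-storage client library.

```python
import csv
import io
from datetime import date

# Metric fields the Search Analytics API returns for every row
METRICS = ["clicks", "impressions", "ctr", "position"]


def rows_to_csv(rows: list[dict], dimensions: list[str]) -> str:
    """Flatten API rows ({"keys": [...], "clicks": ..., ...}) into CSV text."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(dimensions + METRICS)
    for row in rows:
        # "keys" holds one value per requested dimension, in request order
        writer.writerow(list(row.get("keys", [])) + [row.get(m, 0) for m in METRICS])
    return buffer.getvalue()


def backup_object_name(site_url: str, run_date: date) -> str:
    """Hypothetical naming scheme: one CSV object per property and run date."""
    safe_site = site_url.replace("://", "_").replace("/", "_").replace(":", "_").strip("_")
    return f"gsc-backups/{safe_site}/{run_date.isoformat()}.csv"


def upload_csv(bucket_name: str, object_name: str, csv_text: str) -> None:
    """Upload CSV text to Cloud Storage with the google-cloud-storage client."""
    from google.cloud import storage  # lazy import keeps the helpers above testable

    blob = storage.Client().bucket(bucket_name).blob(object_name)
    blob.upload_from_string(csv_text, content_type="text/csv")
```

Writing one object per run date keeps every snapshot instead of overwriting the previous one, which also means the job only ever needs to create new objects.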

3. Dockerfile Setup

To run the Python script on Google Cloud, you’ll need a Dockerfile that defines the environment for the application. Docker allows you to “containerize” the application, making it easy to deploy to Google Cloud.

The requirements.txt file lists the necessary libraries, such as google-api-python-client, google-auth, and pandas, plus google-cloud-storage if the upload uses the Cloud Storage client library.
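One common way to keep the same image reusable across properties is to pass settings as environment variables, for example with `docker run -e` locally or `gcloud run deploy --set-env-vars` on Cloud Run. The sketch below assumes hypothetical variable names (`GSC_SITE_URL`, `GSC_BUCKET`, `GSC_DAYS`); the original guide does not say how its script is configured.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class BackupConfig:
    site_url: str
    bucket_name: str
    days: int = 30


def load_config(env: Mapping[str, str] = os.environ) -> BackupConfig:
    """Read backup settings from (hypothetically named) environment variables."""
    missing = [name for name in ("GSC_SITE_URL", "GSC_BUCKET") if not env.get(name)]
    if missing:
        # Fail fast at container start-up rather than halfway through a backup
        raise RuntimeError(f"Missing required environment variables: {', '.join(missing)}")
    return BackupConfig(
        site_url=env["GSC_SITE_URL"],
        bucket_name=env["GSC_BUCKET"],
        days=int(env.get("GSC_DAYS", "30")),
    )
```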

4. Setting Up Google Cloud and IAM

- Enable Google Cloud APIs: In the Google Cloud console, enable the Google Search Console API and the Cloud Storage API, plus the Cloud Run, Artifact Registry, and Cloud Scheduler APIs used in the later steps.

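- Create a Service Account: Set up a dedicated service account for the backup job and later run the Cloud Run service as this account. Search Console access is not granted through Google Cloud IAM roles: add the service account's email address as a user on your Search Console property (a Restricted user can read performance data). For the bucket, grant a Cloud Storage IAM role such as Storage Object Creator, which allows uploading new files; Storage Admin also works but grants far more than the job needs.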

- Cloud Storage Bucket: Create a Google Cloud Storage bucket for the backup files and keep it private: enable uniform bucket-level access and public access prevention so that no file can be shared publicly by accident.
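To confirm that the bucket really is private, a small check like the following sketch could run once after setup. It uses the google-cloud-storage client's `get_iam_policy` and the bucket's `iam_configuration.public_access_prevention` setting; the helper names and the choice to raise an error are assumptions for illustration.

```python
# IAM principals that expose a bucket to anyone on the internet or any Google account
PUBLIC_MEMBERS = {"allUsers", "allAuthenticatedUsers"}


def public_bindings(bindings) -> list[tuple[str, str]]:
    """Return (role, member) pairs from IAM bindings that grant public access."""
    exposed = []
    for binding in bindings:
        for member in sorted(binding.get("members", ())):
            if member in PUBLIC_MEMBERS:
                exposed.append((binding["role"], member))
    return exposed


def check_bucket_is_private(bucket_name: str) -> None:
    """Raise if the bucket's IAM policy grants access to public principals."""
    from google.cloud import storage  # lazy import keeps public_bindings testable

    bucket = storage.Client().get_bucket(bucket_name)
    policy = bucket.get_iam_policy(requested_policy_version=3)
    exposed = public_bindings(policy.bindings)
    if exposed:
        raise RuntimeError(f"Bucket {bucket_name} is publicly accessible via {exposed}")
    # "enforced" blocks any future attempt to grant public access
    if bucket.iam_configuration.public_access_prevention != "enforced":
        print(f"Note: public access prevention is not enforced on {bucket_name}")
```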

5. Deploying to Google Cloud Run

To deploy and run the Python script, use Google Cloud Run, which runs containerized applications in a fully managed, serverless environment.

- Push Docker Image: Build the Docker image and push it to Artifact Registry. Google Container Registry, which older guides use, is deprecated in favor of Artifact Registry.

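- Deploy to Cloud Run: Deploy the containerized application to Cloud Run. Keeping the service private (not allowing unauthenticated invocations) ensures that only authorized callers can trigger a backup.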

Once deployed, each request to the Cloud Run service runs your Python script, which pulls the data from GSC and uploads it to your private Google Cloud Storage bucket. Cloud Run does not run the script on a schedule by itself; the next step adds a scheduled trigger.
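Cloud Run services are invoked over HTTP and must listen on the port given in the `PORT` environment variable (8080 by default). A minimal, dependency-free entry point could look like this sketch; `run_backup` is a hypothetical callable that would chain the fetch, CSV, and upload steps, and answering POST requests with a 500 status on failure is an assumption rather than the original implementation.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Callable


def make_handler(run_backup: Callable[[], str]) -> type:
    """Create a request handler class that runs `run_backup` on each POST."""

    class BackupHandler(BaseHTTPRequestHandler):
        def do_POST(self):
            try:
                message, status = run_backup(), 200
            except Exception as exc:  # surface failures to the caller as HTTP 500
                message, status = f"backup failed: {exc}", 500
            body = message.encode("utf-8")
            self.send_response(status)
            self.send_header("Content-Type", "text/plain; charset=utf-8")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

    return BackupHandler


def serve(run_backup: Callable[[], str]) -> None:
    """Listen on $PORT (set by Cloud Run; 8080 as a local fallback) forever."""
    port = int(os.environ.get("PORT", "8080"))
    HTTPServer(("0.0.0.0", port), make_handler(run_backup)).serve_forever()
```

Returning a non-2xx status on failure lets the caller, such as a Cloud Scheduler job, record the run as failed instead of silently succeeding. A framework such as Flask would work equally well; the standard library is used here only to keep the example self-contained.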

6. Automating the Process

To run the backup periodically, trigger it with Google Cloud Scheduler. Create a Scheduler job with a cron expression (for example, `0 6 * * *` runs every day at 06:00) that sends an authenticated HTTP request to the Cloud Run service daily or weekly, so your data stays backed up automatically.
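Creating the Scheduler job programmatically might look like the following sketch using the google-cloud-scheduler client library. The project, region, job ID, service URL, and invoker service account are placeholders; the invoker account is assumed to hold the Cloud Run Invoker role (`roles/run.invoker`) on the service, so that the OIDC token Cloud Scheduler attaches is accepted by a private Cloud Run service.

```python
def scheduler_job_spec(project: str, region: str, job_id: str, service_url: str,
                       invoker_sa: str, schedule: str = "0 6 * * *",
                       time_zone: str = "Etc/UTC") -> dict:
    """Collect the settings for a Scheduler job that POSTs to the Cloud Run service."""
    parent = f"projects/{project}/locations/{region}"
    return {
        "parent": parent,
        "name": f"{parent}/jobs/{job_id}",
        # unix-cron: minute hour day-of-month month day-of-week (default: daily at 06:00)
        "schedule": schedule,
        "time_zone": time_zone,
        "uri": service_url,
        "invoker_service_account": invoker_sa,
    }


def create_scheduler_job(spec: dict):
    """Create the job with the google-cloud-scheduler client library."""
    from google.cloud import scheduler_v1  # lazy import keeps the spec helper testable

    job = scheduler_v1.Job(
        name=spec["name"],
        schedule=spec["schedule"],
        time_zone=spec["time_zone"],
        http_target=scheduler_v1.HttpTarget(
            uri=spec["uri"],
            http_method=scheduler_v1.HttpMethod.POST,
            # The OIDC token lets a private Cloud Run service authenticate the caller
            oidc_token=scheduler_v1.OidcToken(
                service_account_email=spec["invoker_service_account"],
                audience=spec["uri"],
            ),
        ),
    )
    client = scheduler_v1.CloudSchedulerClient()
    return client.create_job(parent=spec["parent"], job=job)
```

The same job can also be created from the command line with `gcloud scheduler jobs create http`, using its `--schedule`, `--uri`, `--http-method`, and `--oidc-service-account-email` flags.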

Get in touch with us and we can arrange this service for you.

Alex is an experienced SEO consultant with over 14 years of experience working with global brands like Montblanc, Ricoh, Rogue, Gropius Bau and Spartoo. With a focus on data-driven strategies, Alex helps businesses grow their online presence and optimise SEO efforts.

After working in-house as Head of SEO at Spreadshirt, he now works independently, supporting clients globally with a focus on digital transformation through SEO.

He holds an MBA and has completed a Data Science certification, bringing strong analytical skills to SEO. With experience in web development and Scrum methodologies, he excels at collaborating with cross-functional teams to implement scalable digital strategies.

Outside work, he loves sport: running, tennis and swimming in particular!