Introduction

Understanding bot traffic is essential for optimizing website performance, improving security, and enhancing SEO strategy. This project provides a Python script to analyze web server logs and categorize bot traffic, with a focus on search engine crawlers. It leverages the pandas library for data manipulation and analysis.

Why Analyze Bot Traffic?

Search engine crawlers are what get your website indexed, but excessive or malicious bot activity can hurt performance. This script helps you:

- Identify different types of bots accessing your site.

- Analyze their behavior.

- Take appropriate actions based on insights.

Getting Started

Prerequisites

- Python 3.7 or later.

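- The pandas library (`pip install pandas`).

- Web server log files stored in a `logs` directory at the project root.

- Log files must use the Combined Log Format, which includes the referrer and user-agent fields the script relies on; lines that don't match are skipped.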

Configuring the Script

Modify the log_files list in the if __name__ == "__main__": block to specify your log files:

```python
if __name__ == "__main__":
    # Add your log file paths
    log_files = ["logs/your_log_file.log", "logs/another_log_file.log"]
    df = analyze_logs(log_files)
    summarize_analysis(df)
```
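Instead of editing the list by hand, you could pass log files on the command line and fall back to every `.log` file in `logs/` when none are given. The sketch below is a hypothetical addition, not part of the original script; `find_log_files` and `parse_args` are illustrative names.

```python
import argparse
from pathlib import Path


def find_log_files(log_dir="logs", pattern="*.log"):
    """Return every file in log_dir matching pattern, sorted by name."""
    return sorted(str(p) for p in Path(log_dir).glob(pattern))


def parse_args(argv=None):
    """Log files from the command line, or all logs/*.log if none are given."""
    parser = argparse.ArgumentParser(description="Analyze bot traffic in web server logs.")
    parser.add_argument("log_files", nargs="*", help="log files to analyze (default: logs/*.log)")
    args = parser.parse_args(argv)
    return args.log_files or find_log_files()
```

In the main block, `df = analyze_logs(parse_args())` would then replace the hardcoded list, e.g. `python analyze_logs.py logs/access.log`.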

Code Structure and Explanation

The script is organized into key functions:

1. Helper Functions

is_google_ip(ip)

- Purpose: Checks if an IP belongs to Google’s crawler network.

- Implementation: Uses Python's built-in `ipaddress` module to check whether the IP falls within Googlebot’s known ranges.

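```python
import ipaddress

# Googlebot ranges as hardcoded in this script. Google publishes its current
# official list of crawler IP ranges, so check it periodically: ranges change.
GOOGLE_RANGES = [
    "66.249.64.0/19", "66.249.80.0/20", "64.233.160.0/19", "216.239.32.0/19",
    "2001:4860:4801::/48", "2404:6800:4003::/48", "2607:f8b0:4003::/48", "2800:3f0:4003::/48",
]
# Parse the networks once instead of on every call
GOOGLE_NETWORKS = [ipaddress.ip_network(net) for net in GOOGLE_RANGES]


def is_google_ip(ip):
    """Return True if ip falls within one of the Google networks above."""
    try:
        ip_obj = ipaddress.ip_address(ip)
    except ValueError:
        # Not a valid IPv4/IPv6 address (e.g. a hostname or "-")
        return False
    # An IPv4 address is never "in" an IPv6 network (and vice versa): False
    return any(ip_obj in net for net in GOOGLE_NETWORKS)
```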

Search Engine Identifier

- Purpose: Identifies the search engine based on the User-Agent string.

- Implementation: Uses regular expressions to match known bot patterns.

```python
import re


def identify_search_engine(user_agent):
    # User-Agent substrings that identify each crawler
    search_engines = {
        'Google': ['Googlebot', 'AdsBot-Google'],
        'Bing': ['bingbot'],
        'DuckDuckGo': ['DuckDuckBot'],
        'Yandex': ['YandexBot'],
    }
    # Return the first engine whose pattern appears in the User-Agent
    for engine, patterns in search_engines.items():
        if any(re.search(pattern, user_agent, re.IGNORECASE) for pattern in patterns):
            return engine
    return 'Other'
```

2. Main Analysis Function

analyze_logs(log_files)

- Purpose: Parses web server logs to extract key information.

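- Implementation: Reads each log file line by line, uses a regular expression to extract the IP address, timestamp, request method, URL, status code, referrer and user agent, then classifies the user agent with `identify_search_engine`.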

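```python
import re

import pandas as pd

# Combined Log Format, e.g.
# 66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /page HTTP/1.1" 200 512 "-" "Mozilla/5.0 ..."
# ^(\S+) captures IPv4 and IPv6 client addresses alike.
LOG_PATTERN = re.compile(
    r'^(\S+).*?\[(.*?)\] "(\w+) (.*?) HTTP[^"]*" (\d{3}) \S+ "(.*?)" "(.*?)"'
)


def analyze_logs(log_files):
    records = []
    for log_file in log_files:
        # errors="replace" stops one stray non-UTF-8 byte from aborting the run
        with open(log_file, "r", encoding="utf-8", errors="replace") as f:
            for line in f:
                match = LOG_PATTERN.search(line)
                if not match:
                    continue  # skip lines in an unexpected format
                ip, timestamp, method, url, status, referer, user_agent = match.groups()
                records.append({
                    'ip': ip, 'datetime': timestamp, 'method': method,
                    'url': url, 'status': int(status), 'referer': referer,
                    'user_agent': user_agent,
                    'search_engine': identify_search_engine(user_agent),
                })
    df = pd.DataFrame(records)
    if not df.empty:
        # Timestamps end with a UTC offset ("+0000"), so %z is required;
        # utc=True converts mixed offsets into one timezone-aware column.
        df['datetime'] = pd.to_datetime(
            df['datetime'], format="%d/%b/%Y:%H:%M:%S %z", utc=True, errors='coerce'
        )
    return df
```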
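To see what the extraction step produces, here is a worked example on a single made-up Combined Log Format line, using a pattern anchored at the start of the line so IPv6 addresses are captured too. It also shows why the timestamp format needs `%z`: Combined Log Format timestamps end with a UTC offset such as `+0200`, and a format string without `%z` makes every timestamp fail, so `errors='coerce'` silently turns the whole column into `NaT`. The sample line and variable names are illustrative only.

```python
import re

import pandas as pd

LOG_PATTERN = re.compile(
    r'^(\S+).*?\[(.*?)\] "(\w+) (.*?) HTTP[^"]*" (\d{3}) \S+ "(.*?)" "(.*?)"'
)

# A made-up sample line in Combined Log Format
sample = ('66.249.66.1 - - [10/Oct/2023:13:55:36 +0200] "GET /products/shoes HTTP/1.1" '
          '200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"')

ip, timestamp, method, url, status, referer, user_agent = LOG_PATTERN.search(sample).groups()
print(ip, method, url, status)  # 66.249.66.1 GET /products/shoes 200
print(timestamp)                # 10/Oct/2023:13:55:36 +0200

# Without %z the trailing offset is left unparsed, so coerce yields NaT
without_tz = pd.to_datetime(timestamp, format="%d/%b/%Y:%H:%M:%S", errors="coerce")
# With %z the offset is parsed; utc=True converts the result to UTC
with_tz = pd.to_datetime(timestamp, format="%d/%b/%Y:%H:%M:%S %z", utc=True)
print(without_tz, with_tz)
```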

3. Summarization and Output

summarize_analysis(df)

- Purpose: Provides a high-level summary of search engine bot activity.

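```python
def summarize_analysis(df):
    # Nothing was parsed: no matching lines or no log files
    if df.empty:
        print("No data to summarize.")
        return
    print("\n--- Summary of Log Analysis ---")
    print("\nTop Search Engines:")
    # Number of requests attributed to each search engine (and 'Other')
    print(df['search_engine'].value_counts())
```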

4. Main Execution

- Purpose: Runs the analysis workflow.

```python
if __name__ == "__main__":
    log_files = ["logs/your_log_file.log"]  # replace with your own log file paths
    df = analyze_logs(log_files)
    summarize_analysis(df)
```

Running the Script

- Open a terminal.

- Navigate to the project directory.

- Execute:

```shell
python analyze_logs.py
```

Extending the Script

Here are ways to improve the script:

More Detailed Analysis

- Calculate status code distributions per search engine.

- Identify top pages crawled by different bots.

- Filter out static assets (CSS, JavaScript, images).

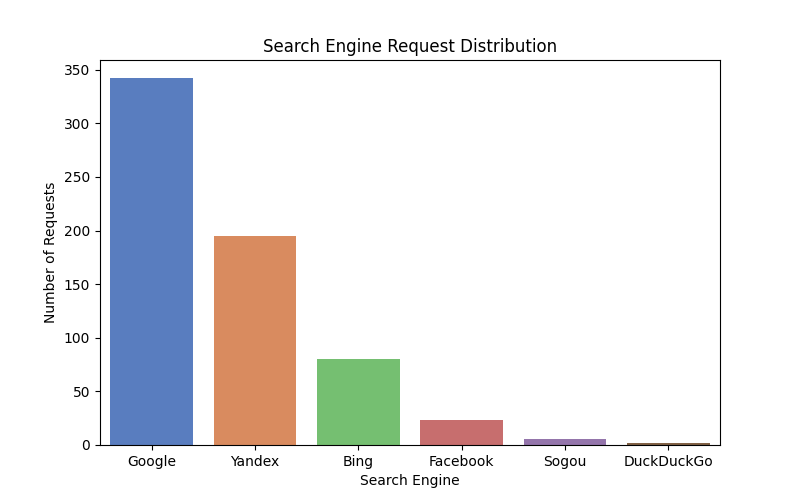

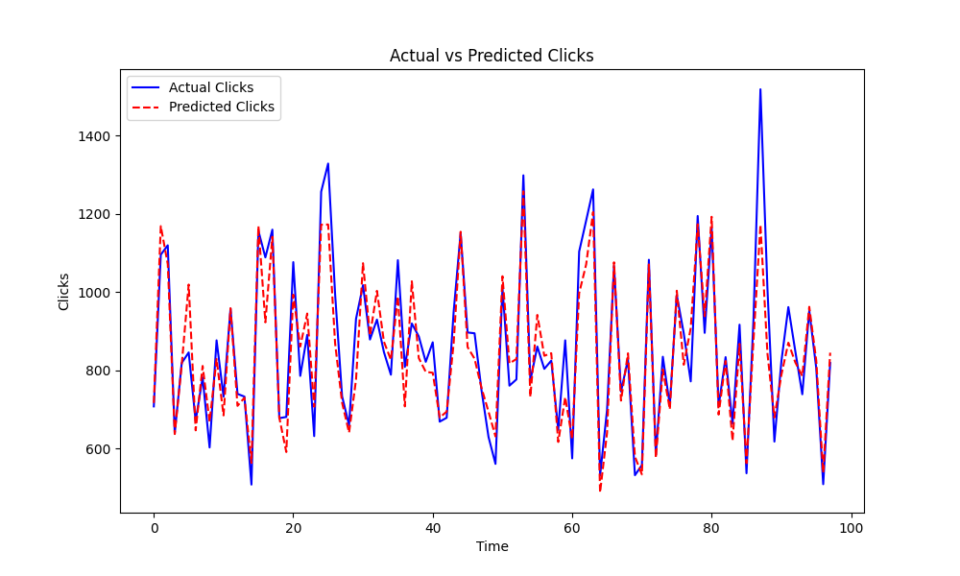
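The three ideas in the list above map onto short pandas operations on the DataFrame returned by `analyze_logs`. This is a minimal sketch assuming the `search_engine`, `url` and `status` columns built by that function; the function names and the list of static-asset extensions are hypothetical and should be adjusted to your site.

```python
import pandas as pd

# Hypothetical list of static-asset extensions; query strings are allowed.
STATIC_ASSET_PATTERN = r"\.(?:css|js|png|jpe?g|gif|svg|ico|webp|woff2?)(?:\?|$)"


def status_by_engine(df):
    """Rows: search engine, columns: HTTP status code, values: request counts."""
    return pd.crosstab(df["search_engine"], df["status"])


def top_pages_by_engine(df, n=10):
    """The n most-requested URLs for each search engine."""
    counts = df.groupby(["search_engine", "url"]).size().rename("hits").reset_index()
    return (counts.sort_values(["search_engine", "hits"], ascending=[True, False])
                  .groupby("search_engine")
                  .head(n))


def drop_static_assets(df):
    """Remove requests for CSS, JavaScript, images and fonts."""
    is_static = df["url"].str.contains(STATIC_ASSET_PATTERN, case=False, regex=True, na=False)
    return df[~is_static]
```

Filtering first, e.g. `top_pages_by_engine(drop_static_assets(df))`, keeps the page ranking focused on HTML pages rather than stylesheets and images.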

Data Visualization

- Use `matplotlib` and `seaborn` to generate insights through charts.

- Create automated reports in HTML or PDF.
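A natural first chart is crawl volume over time. The sketch below assumes the timezone-aware `datetime` column and the `search_engine` column produced by `analyze_logs`; it uses pandas' built-in plotting (which draws with matplotlib) rather than seaborn, and the function names and output file name are hypothetical.

```python
import pandas as pd


def daily_crawl_counts(df):
    """Requests per day per search engine (rows: dates, columns: engines)."""
    clean = df.dropna(subset=["datetime"])  # skip unparseable timestamps
    return (clean.groupby([clean["datetime"].dt.date, "search_engine"])
                 .size()
                 .unstack(fill_value=0))


def plot_daily_crawls(df, output_path="crawl_volume.png"):
    """Save a line chart of daily requests per search engine."""
    # Imported here so daily_crawl_counts works without matplotlib installed
    import matplotlib.pyplot as plt

    ax = daily_crawl_counts(df).plot(figsize=(10, 4), title="Requests per day by search engine")
    ax.set_xlabel("Date")
    ax.set_ylabel("Requests")
    ax.figure.tight_layout()
    ax.figure.savefig(output_path)
    plt.close(ax.figure)
```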

GeoIP Analysis

- Integrate a GeoIP library to track bot locations.

Advanced Bot Detection

- Analyze request patterns and detect anomalies in user agents.
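Two simple checks could be a starting point: flagging requests whose user agent claims to be Google but whose IP is outside Google's ranges (a common sign of a spoofed Googlebot), and flagging IPs with unusually high request rates. This is a sketch, not a complete detector; the function names are hypothetical, `ip_check` is meant to be a function such as `is_google_ip`, and the 60-requests-per-minute threshold is an arbitrary example value, not a recommended limit.

```python
import pandas as pd


def flag_fake_googlebots(df, ip_check):
    """Rows whose user agent claims Google but whose IP fails ip_check."""
    claims_google = df["search_engine"] == "Google"
    verified = df["ip"].map(ip_check).astype(bool)
    return df[claims_google & ~verified]


def busiest_ips(df, freq="1min", threshold=60):
    """IPs that made more than `threshold` requests in any single `freq` window."""
    clean = df.dropna(subset=["datetime"])
    per_window = clean.groupby(["ip", pd.Grouper(key="datetime", freq=freq)]).size()
    peaks = per_window.groupby(level="ip").max()  # worst window per IP
    return peaks[peaks > threshold].sort_values(ascending=False)
```

For example, `flag_fake_googlebots(df, is_google_ip)["ip"].value_counts()` would list the addresses most often impersonating Googlebot.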

Database Integration

- Store parsed log data in SQLite or PostgreSQL for efficient querying.
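With SQLite, pandas can write the parsed DataFrame straight into a table through Python's built-in `sqlite3` module and query it back with SQL. A minimal sketch, assuming the DataFrame from `analyze_logs`; the database file name, table name and function names are hypothetical.

```python
import sqlite3
from contextlib import closing

import pandas as pd


def store_logs(df, db_path="bot_logs.db", table="requests"):
    """Append parsed log rows to a SQLite table, creating it if needed."""
    with closing(sqlite3.connect(db_path)) as conn:
        df.to_sql(table, conn, if_exists="append", index=False)
        conn.commit()


def hits_per_engine(db_path="bot_logs.db", table="requests"):
    """Request counts per search engine, computed in SQL."""
    # The table name is interpolated directly: only pass trusted, hardcoded names
    query = (f"SELECT search_engine, COUNT(*) AS hits FROM {table} "
             "GROUP BY search_engine ORDER BY hits DESC")
    with closing(sqlite3.connect(db_path)) as conn:
        return pd.read_sql_query(query, conn)
```

Appending each run's results lets you compare crawl activity across log rotations without re-parsing old files.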

Conclusion

This Python script provides a powerful way to analyze bot traffic from web server logs. By identifying and categorizing search engine bots, it helps webmasters optimize SEO strategies and website performance. With additional enhancements, it can serve as a robust tool for deeper traffic insights.

You can find the complete code here.

Alex is an experienced SEO consultant with over 14 years of experience working with global brands such as Montblanc, Ricoh, Rogue, Gropius Bau and Spartoo. With a focus on data-driven strategies, Alex helps businesses grow their online presence and optimise their SEO efforts.

After working in-house as Head of SEO at Spreadshirt, he now works independently, supporting clients globally with a focus on digital transformation through SEO.

He holds an MBA and has completed a Data Science certification, bringing strong analytical skills to SEO. With experience in web development and Scrum methodologies, he excels at collaborating with cross-functional teams to implement scalable digital strategies.

Outside work, he loves sport: running, tennis and swimming in particular!